Brief History of ADAS (1970s → Present)

1970s–1990s: Control-Oriented Safety Systems

- 1971: Japan’s Honda develops an early form of lane-keeping aid in experimental research vehicles.

- 1978: Mercedes-Benz launches the Anti-lock Braking System (ABS) in the S-Class, often cited as the first commercial ADAS. ABS prevents wheel lock-up under hard braking, so the driver keeps steering control.

- 1995: Electronic Stability Control (ESC) is introduced, combining ABS and traction control to help prevent under- and over-steering.

2000s: Sensor-Based Assistive Features Emerge

- Adaptive Cruise Control (ACC) uses radar to maintain a safe distance from vehicles ahead.

- Lane Departure Warning (LDW) systems begin to appear, relying on cameras to detect lane markings.

- Blind Spot Detection and Parking Assist systems become standard in premium models.

2010s: Shift Toward Perception and Decision-Making

- Fusion of camera, radar, and LiDAR data becomes more common.

- Automakers introduce Automatic Emergency Braking (AEB) and Traffic Sign Recognition, powered by basic computer vision.

- Tesla’s Autopilot and Mobileye’s vision-based systems lead semi-autonomous driving efforts.

2020s–Present: AI-Driven ADAS

- Deep learning models power perception modules for object detection and scene segmentation, and increasingly support path planning.

- Multimodal fusion combines sensor inputs to form unified environmental models.

- AI models begin to predict human behavior (e.g., lane changes, braking intent).

- Research includes reinforcement learning, intention prediction, and real-time 3D mapping.

Introduction to ADAS and AI

AI Techniques in ADAS

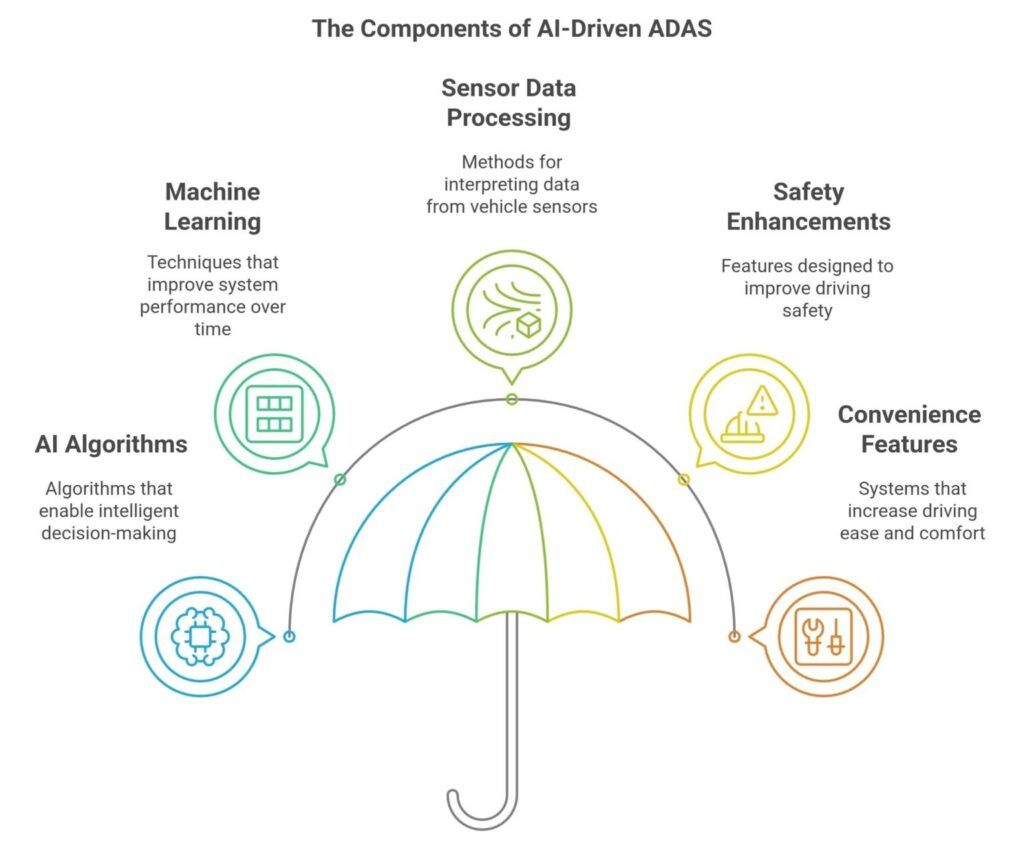

ADAS uses various AI techniques to interpret and respond effectively to the driving environment. Key methodologies include:

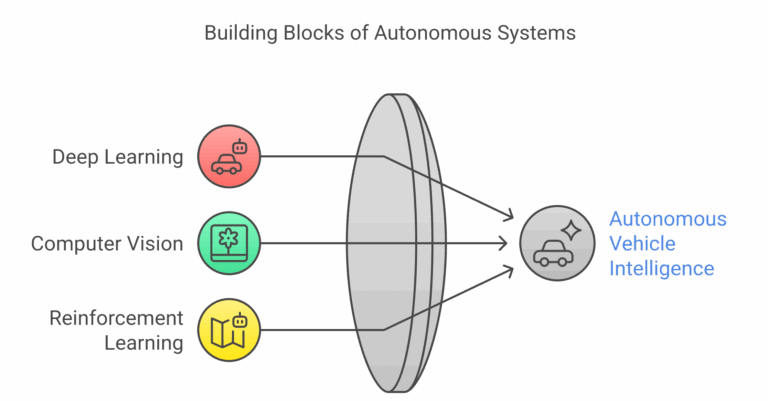

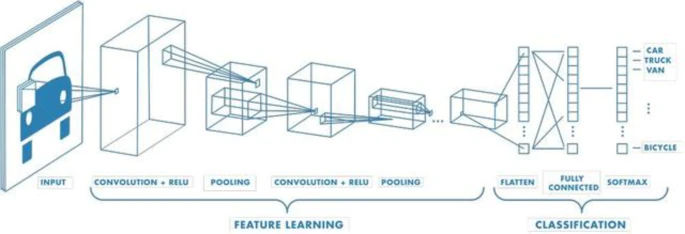

- Deep Learning: Deep learning, particularly through convolutional neural networks (CNNs), is widely used for image and pattern recognition in ADAS. It enables the detection and classification of objects such as pedestrians, vehicles, traffic signs, and lane markings—often as part of a computer vision pipeline.

- Computer Vision: Computer vision, powered by deep learning, interprets visual data from cameras and other sensors to build a semantic understanding of the vehicle’s environment. This includes detecting road features, tracking dynamic objects, and supporting decision-making in real time.

- Reinforcement Learning (RL): This approach allows systems to learn from experience by receiving feedback on their actions, improving decision-making over time. While promising, RL is mostly explored in simulation and limited pilot settings, because on-road deployment carries high safety and verification requirements. As a result, mass-production ADAS relies more on supervised and imitation learning techniques, which offer greater predictability and traceability.

How AI Enhances ADAS Functionality: A Modular Approach

- Adaptive – AI-based systems can learn from vast datasets and improve over time.

- Real-time Processing of Complexity – AI can interpret intricate sensor data and generate appropriate responses for complex and dynamic real-time driving scenarios.

- Predictive (in specific contexts) – Machine learning models can anticipate certain short-term behaviors (e.g., driver intent, pedestrian trajectories) and road conditions, moving beyond purely reactive responses.

So, why do we need AI-driven ADAS?

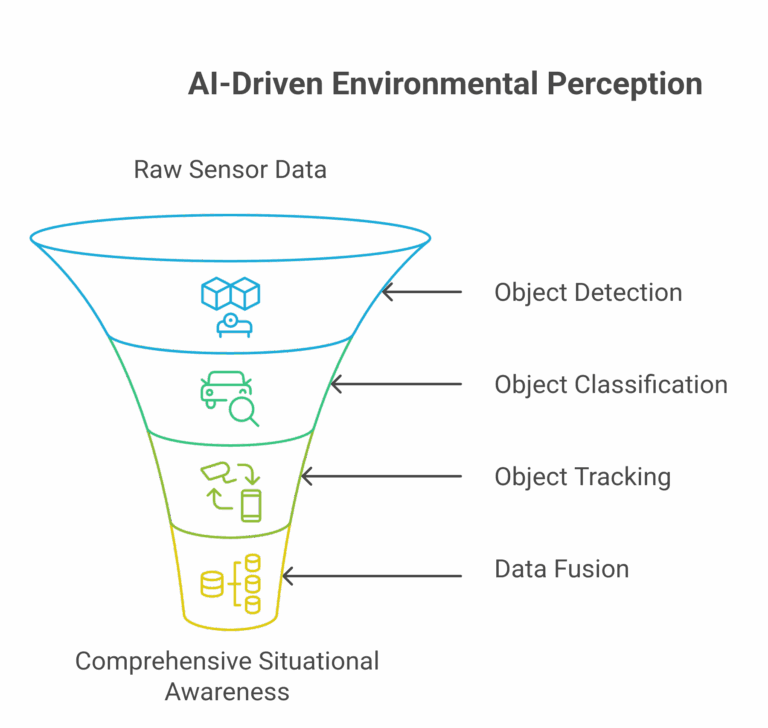

1. Perception: Understanding the World

AI’s Value Add:

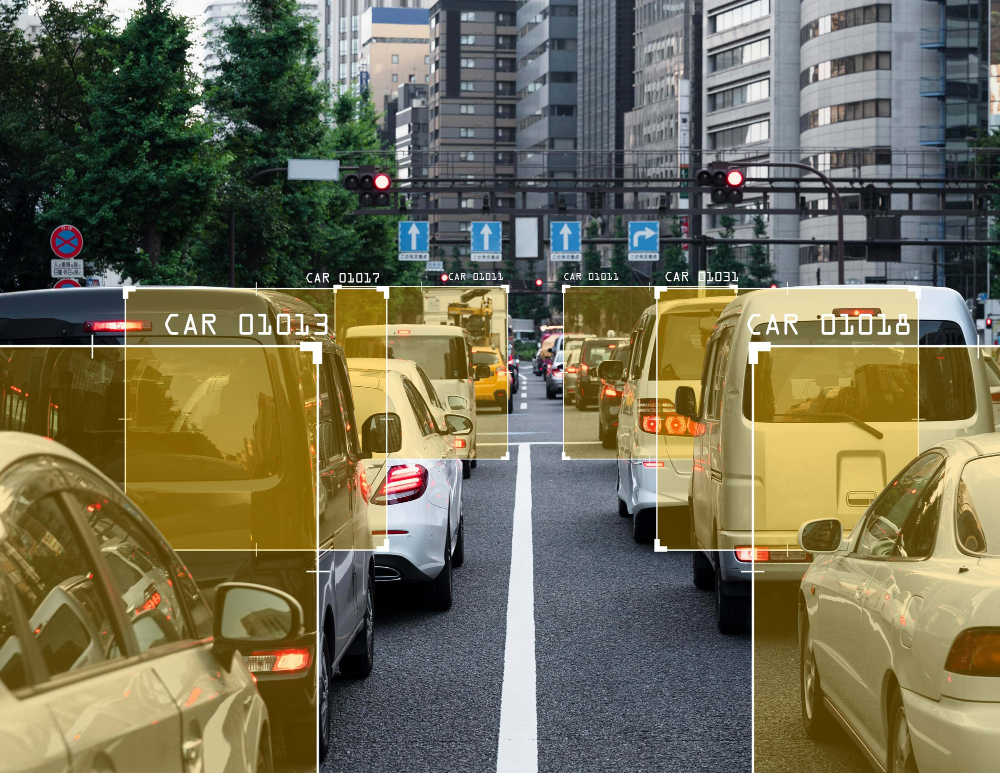

- Robust Object Detection & Classification (CNNs): Instead of relying on hand-engineered features, deep learning models (e.g., CNN-based detectors such as YOLO and Faster R-CNN) learn complex patterns directly from large datasets. This leads to more accurate and robust detection of diverse objects under varying conditions (lighting, weather, occlusion). It is the computer vision capability described earlier, applied to object detection.

- Semantic & Instance Segmentation: AI allows for pixel-level understanding of the scene (e.g., segmenting road, sky, buildings, drivable areas, individual objects). This provides a rich, fine-grained environmental model crucial for safe navigation.

- Multi-modal Sensor Fusion: Deep learning models can fuse data from heterogeneous sensors (cameras, LiDAR, radar) at different levels (raw, feature, or object level). Fusion compensates for individual sensor limitations, produces more robust object lists (position, velocity, class, uncertainty), and underpins situational awareness.

- 3D Reconstruction & Occupancy Grids: AI models can build detailed 3D representations of the environment, crucial for understanding free space and potential obstacles.

Reference link: https://www.nature.com/articles/s41598-025-91293-5
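Object-level fusion, the last of the fusion levels mentioned above, can be illustrated with a small sketch. It assumes each sensor reports a distance to the same object together with a variance, and it combines them by inverse-variance weighting. This is a classical technique standing in for a learned fusion model, and the `RangeEstimate` type, sensor names, and numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class RangeEstimate:
    """Distance to one object as reported by a single sensor (hypothetical type)."""
    sensor: str
    distance_m: float
    variance_m2: float  # larger variance = less trustworthy measurement


def fuse_ranges(estimates):
    """Inverse-variance weighted average of independent range estimates.

    Returns (fused_distance_m, fused_variance_m2).
    """
    if not estimates:
        raise ValueError("need at least one estimate")
    weights = [1.0 / e.variance_m2 for e in estimates]
    total = sum(weights)
    fused = sum(w * e.distance_m for w, e in zip(weights, estimates)) / total
    return fused, 1.0 / total


# Illustrative values only: the radar is assumed to be the more precise ranger.
camera = RangeEstimate("camera", distance_m=42.0, variance_m2=4.0)
radar = RangeEstimate("radar", distance_m=40.0, variance_m2=1.0)
fused_distance, fused_variance = fuse_ranges([camera, radar])
```

With these inputs the fused distance is about 40.4 m, closer to the radar reading, and the fused variance is lower than that of either sensor, which is the sense in which fusion overcomes individual sensor limitations.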

2. Planning: Deciding the Path Forward

AI’s Value Add:

- Behavior Prediction (Learning-based): While true long-term prediction is still nascent, ML models excel at predicting short-term behavior of other road users (e.g., predicting a pedestrian’s next few steps, another vehicle’s lane change intent) based on learned patterns from extensive real-world data. This significantly improves proactive decision-making.

- Scenario-based Decision Making: AI, sometimes using reinforcement learning or inverse reinforcement learning (though more common in simulation/research), can help learn optimal driving policies for complex, ambiguous scenarios that are difficult to hard-code (e.g., yielding in complex intersections, merging gracefully in dense traffic).

- Path Optimization: ML can optimize paths for comfort and fuel efficiency, beyond purely geometric considerations, by learning preferred human driving styles.
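The path optimization idea above can be sketched as a weighted cost over candidate paths. In this minimal example, each candidate is a list of lateral offsets (in metres) sampled at fixed time steps during a lane change. The cost terms, the function names, and the weights are assumptions for illustration; in a learned system, the weights are where a model of preferred human driving style would plug in.

```python
def comfort_cost(offsets):
    """Sum of squared second differences: a discrete proxy for lateral acceleration."""
    return sum(
        (offsets[i + 1] - 2 * offsets[i] + offsets[i - 1]) ** 2
        for i in range(1, len(offsets) - 1)
    )


def tracking_cost(offsets, target):
    """Squared distance between the final offset and the target offset."""
    return (offsets[-1] - target) ** 2


def pick_path(candidates, target, w_comfort, w_tracking):
    """Return the candidate with the lowest weighted cost."""
    def total(path):
        return w_comfort * comfort_cost(path) + w_tracking * tracking_cost(path, target)
    return min(candidates, key=total)


# Three hypothetical lane-change candidates toward a lane centre 3.0 m to the side.
abrupt = [0.0, 3.0, 3.0, 3.0, 3.0]
gradual = [0.0, 0.5, 1.5, 2.5, 3.0]
lazy = [0.0, 0.25, 0.75, 1.5, 2.0]
candidates = [abrupt, gradual, lazy]

balanced_choice = pick_path(candidates, target=3.0, w_comfort=1.0, w_tracking=1.0)
comfort_heavy_choice = pick_path(candidates, target=3.0, w_comfort=1.0, w_tracking=0.1)
```

With equal weights the gradual path wins; when reaching the target matters much less than comfort, the incomplete but smoother path wins instead. The selection is only as good as the weights, which is why learning them from driving data is attractive.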

3. Control: Executing the Action

AI’s Value Add (Data-Driven Fine-Tuning):

- Adaptive Control Parameters: ML can adaptively tune the parameters of the underlying deterministic, physics-based controllers (e.g., PID controllers). By analyzing real-time vehicle dynamics and environmental conditions, ML models can learn to adjust control gains to achieve smoother, more precise, or more responsive maneuvers than fixed parameters would allow.

- Improved Responsiveness & Nuance: Data-driven fine-tuning of deterministic control lets the system react with greater nuance to dynamic changes, often leading to a more natural and comfortable driving experience.
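A minimal sketch of adaptive gain tuning, under stated assumptions: a standard PID controller handles lateral error, and its proportional gain is set at every step by a schedule that depends on vehicle speed. The `scheduled_kp` formula here is a hand-written placeholder for what would be a learned model, and all gains and constants are illustrative rather than tuned values.

```python
class PID:
    """Textbook discrete PID controller."""

    def __init__(self, kp, ki, kd):
        self.kp, self.ki, self.kd = kp, ki, kd
        self._integral = 0.0
        self._prev_error = None

    def step(self, error, dt):
        self._integral += error * dt
        # No derivative term on the first call, since there is no previous error yet.
        derivative = 0.0 if self._prev_error is None else (error - self._prev_error) / dt
        self._prev_error = error
        return self.kp * error + self.ki * self._integral + self.kd * derivative


def scheduled_kp(speed_mps, base_kp=0.8):
    """Placeholder for a learned gain schedule: soften steering gain as speed rises."""
    return base_kp / (1.0 + 0.05 * speed_mps)


def steering_command(pid, lateral_error_m, speed_mps, dt):
    """Update the gain from current conditions, then run the deterministic controller."""
    pid.kp = scheduled_kp(speed_mps)
    return pid.step(lateral_error_m, dt)


# Same 0.5 m lateral error at two speeds (ki and kd zeroed to isolate the effect of kp).
low_speed_cmd = steering_command(PID(0.8, 0.0, 0.0), 0.5, speed_mps=5.0, dt=0.02)
high_speed_cmd = steering_command(PID(0.8, 0.0, 0.0), 0.5, speed_mps=30.0, dt=0.02)
```

The controller itself stays deterministic and inspectable; only the gain lookup is data-driven. That separation is one reason this pattern is easier to verify than replacing the controller with a learned policy.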

End-to-End (E2E) Machine Learning AD Stacks

- Concept: The E2E system takes raw sensor inputs (e.g., camera images, LiDAR point clouds) and, often, a high-level navigational command, and directly outputs low-level control commands (steering angle, acceleration/deceleration).

- AI’s Role: A massive deep learning model learns the entire driving policy, including perception, prediction, and planning, directly from vast amounts of recorded driving data (often augmented with human driving actions). This approach aims to leverage the power of deep learning to find optimal, non-linear relationships across the entire driving task, potentially leading to more human-like and robust driving in complex scenarios.

- Advantages: Potentially simpler architecture, better handling of complex scenarios due to holistic learning, and more human-like driving.

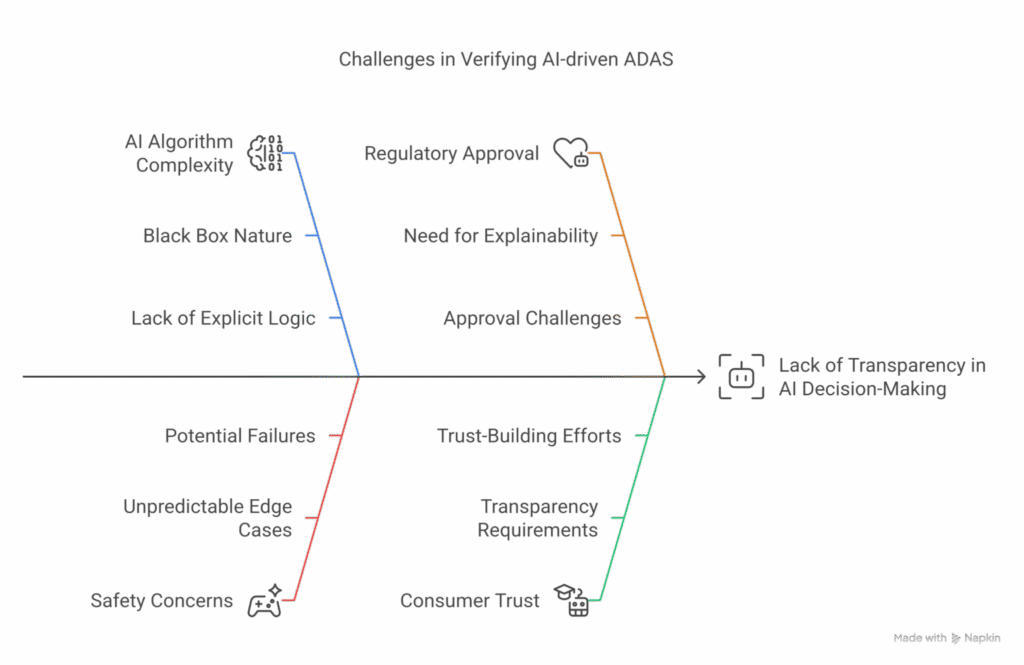

- Challenges: Explainability (black box), data hunger, difficulty in verification and validation, and ensuring robustness across all edge cases.
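The end-to-end interface described above (sensor input plus a navigational command in, control commands out) can be sketched in a few lines. This is a toy stand-in, not a real driving model: a single linear layer with untrained random weights replaces the large deep network, the image is a flat list of pixel intensities, and the command set and class name are hypothetical.

```python
import math
import random

COMMANDS = ("follow_lane", "turn_left", "turn_right")  # hypothetical command set


def one_hot(command):
    if command not in COMMANDS:
        raise ValueError(f"unknown command: {command}")
    return [1.0 if command == c else 0.0 for c in COMMANDS]


class TinyE2EPolicy:
    """One linear layer + tanh, standing in for a large deep network."""

    def __init__(self, n_pixels, seed=0):
        rng = random.Random(seed)
        self.n_pixels = n_pixels
        n_inputs = n_pixels + len(COMMANDS)
        # Two output rows (steering, acceleration). Weights are random and
        # untrained; a real system would learn them from recorded driving data.
        self.weights = [
            [rng.uniform(-0.1, 0.1) for _ in range(n_inputs)] for _ in range(2)
        ]

    def act(self, pixels, command):
        if len(pixels) != self.n_pixels:
            raise ValueError("unexpected image size")
        features = list(pixels) + one_hot(command)
        steer, accel = (
            math.tanh(sum(w * x for w, x in zip(row, features)))
            for row in self.weights
        )
        # tanh keeps both outputs in [-1, 1], i.e. normalized actuator commands.
        return {"steering": steer, "acceleration": accel}


policy = TinyE2EPolicy(n_pixels=16)
frame = [0.5] * 16  # placeholder for a camera image
controls = policy.act(frame, "turn_left")
```

Note that nothing in `act` exposes an intermediate object list or planned path. That is the architectural simplicity listed under Advantages, and also the source of the explainability and verification concerns listed under Challenges.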

Challenges in AI-Driven ADAS: Performance, Verification & Validation

1. Data Quality, Quantity & Diversity

2. Data & Compute Needs

3. Environmental Variability

4. Algorithm Transparency and Explainability

5. Operational Design Domain (ODD) Coverage

- Scenario-based testing across the ODD spectrum

- Boundary condition analysis

- Onboard monitoring to detect and respond to ODD breaches

6. Edge Case Robustness

7. Safety and Cybersecurity

8. Regulatory and Ethical Considerations
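The onboard ODD monitoring listed under item 5 can be illustrated with a small sketch. It assumes the ODD is expressed as a handful of explicit limits and that the vehicle can observe the matching quantities at runtime. The field names, limit values, and the single takeover response are hypothetical; a production monitor would cover far more dimensions and typically stage its responses.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OddLimits:
    """Boundaries of the operational design domain (illustrative fields only)."""
    max_speed_kph: float
    min_visibility_m: float
    allowed_road_types: frozenset


@dataclass(frozen=True)
class DrivingContext:
    """Conditions observed at runtime (illustrative fields only)."""
    speed_kph: float
    visibility_m: float
    road_type: str


def odd_breaches(ctx, limits):
    """Return the names of all ODD dimensions that are currently violated."""
    breaches = []
    if ctx.speed_kph > limits.max_speed_kph:
        breaches.append("speed")
    if ctx.visibility_m < limits.min_visibility_m:
        breaches.append("visibility")
    if ctx.road_type not in limits.allowed_road_types:
        breaches.append("road_type")
    return breaches


def respond(breaches):
    """Simplest possible policy: hand control back on any breach."""
    return "request_driver_takeover" if breaches else "continue"


highway_odd = OddLimits(
    max_speed_kph=130.0,
    min_visibility_m=100.0,
    allowed_road_types=frozenset({"highway"}),
)
clear_highway = DrivingContext(speed_kph=110.0, visibility_m=250.0, road_type="highway")
foggy_city = DrivingContext(speed_kph=110.0, visibility_m=60.0, road_type="urban")
```

Returning the list of breached dimensions, rather than a single boolean, also supports the boundary condition analysis mentioned in the same item, since logged breaches show which limits are approached most often.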

The Path Forward: Best Practices for AI V&V in ADAS

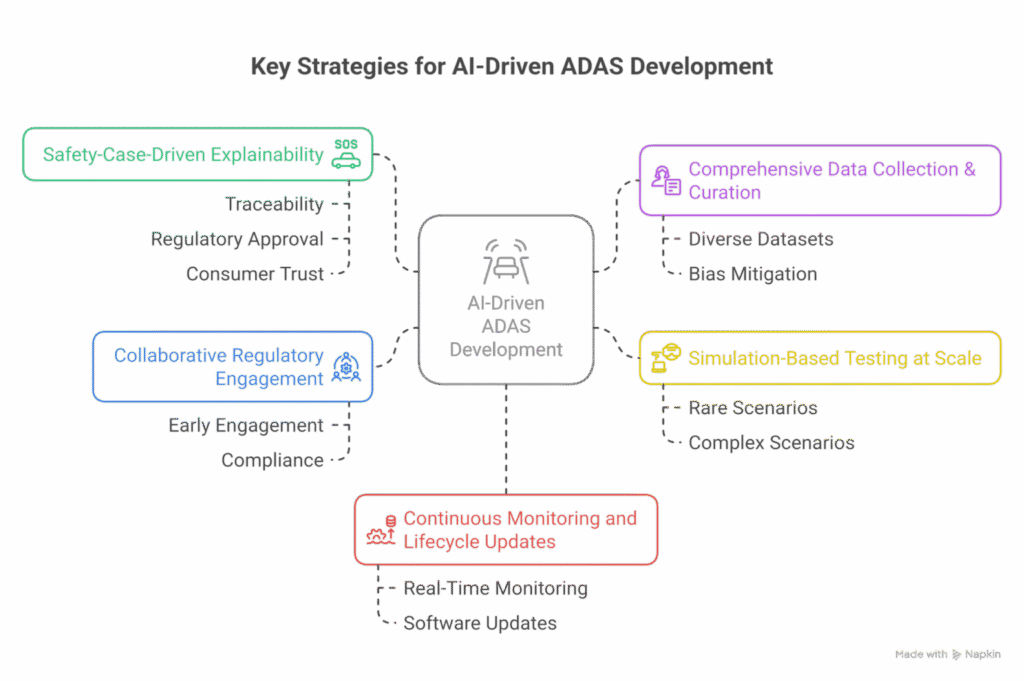

- Safety-Case-Driven Explainability: Embed explainable decision-making within the safety case, supporting traceability, regulatory approval, and consumer trust.

- Comprehensive Data Collection & Curation: Develop diverse, representative datasets and mitigate bias to improve AI model generalization across all driving scenarios.

- Simulation-Based Testing at Scale: Leverage virtual testing environments to simulate rare and complex scenarios not easily captured in real-world testing.

- Collaborative Regulatory Engagement: Work with regulatory bodies from the early stages to shape and comply with evolving safety and performance standards.

- Continuous Monitoring and Lifecycle Updates: Post-deployment, implement real-time monitoring and regular software updates to adapt to changing environments and new threats.

Conclusion

Author